Key steps

When you’re ready to produce your company AI guidelines, here are some steps you can follow to plan, create, and implement your protocols.

There are some great guidelines out there for this process. The two linked below sit at opposite extremes, from corporate governance to startups, but follow a similar pattern. The steps that follow the links summarise a path that you may be able to follow.

- https://www.thecorporategovernanceinstitute.com/insights/guides/creating-an-ai-policy

- https://www.hubspot.com/startups/ai-usage-policy

Establish a working group of broad stakeholders

Create a group of people to lead the development of your AI policy, principles and usage standards. This working group should include leadership and may even include board members, executives, and department heads, as well as relevant technical experts. They should have a foundational understanding of AI and its benefits and risks (or may require this education as the first step). You need to gather diverse insights and ensure the policy addresses all relevant aspects.

Define AI, Purpose & Scope

Create a common understanding of what AI is. It’s important to create a definition of AI that suits your organisation, as this is the foundation for any AI policy, principles or standard. It ensures your teams are clear on what is considered AI and what is not.

Identify the ways in which you will use AI in your operations or develop AI technologies. Define the overarching goals of AI use within your organisation and the boundaries of its application (including what you won’t do).

Within the scope, you should be clear on the components of what is required – for example, policies, principles, standards, and/or procedures. This will also help determine who is required to help.

Establish your core AI principles

Determine how your company’s core principles will relate to AI use and development. These principles will inform the ethical foundation of your AI usage policy. Some initial focus areas should be on autonomy, bias, security, and privacy.

Assess AI Risks and Compliance needs

Learn about the legislation that governs AI tools and ensure that your guidelines are compliant with these regulations. Keep track of draft legislation and guidance so that you design with future compliance in mind as much as possible.

Identify potential risks associated with AI usage and ensure compliance with existing policies and with legal and ethical standards. Also identify the ethical principles that will guide the development and deployment of AI, such as fairness, transparency, and privacy.

There are some great frameworks out there. Our preferred starting point is the EU AI Act, which categorises AI systems into four risk levels:

- Unacceptable Risk: Applications that are considered a threat to people. These comprise subliminal techniques, exploitative systems and social scoring systems. Real-time remote biometric identification systems (e.g. facial recognition) are also prohibited. All of these are banned, unless permitted in rare cases for law enforcement purposes or with a court order.

- High Risk: AI systems that are subject to significant regulatory obligations, primarily products covered by safety laws and software used to manage human facilities or health. This requires enhanced thresholds of diligence, initial risk assessment, and transparency. The technology itself will need to comply with certain requirements, including around risk management, data quality, transparency, human oversight, and accuracy.

- Limited Risk: AI systems that will have to comply with transparency requirements and copyright law, show that content was generated by AI, and be designed to prevent them from generating illegal content.

- Minimal or No Risk: These AI systems likely have the least regulatory oversight.

There is also a potential category of Systemic Risk for high-impact general-purpose AI models such as GPT-4. This is in line with how other systemically important systems (banking, insurance or infrastructure) are treated.

Each risk level has corresponding regulatory requirements to ensure that the level of oversight is appropriate to the risk level. The Act adopts a risk-based approach, aiming to boost public confidence and trust in technology.
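As a starting point, the four risk levels above could be captured in a simple register of your own AI use cases. The sketch below is illustrative only: the use cases, owners and tier assignments are hypothetical examples, the obligations are paraphrased from the summary above rather than from the Act itself, and the names `RiskTier`, `UseCase`, `review_order` and `is_permitted` are our own assumptions. Your working group, not the code, decides which tier applies.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """The four risk levels summarised above, ordered by severity."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Paraphrased from the summary above; not the Act's wording and not legal advice.
OBLIGATIONS = {
    RiskTier.MINIMAL: "least regulatory oversight",
    RiskTier.LIMITED: "transparency, e.g. show that content was generated by AI",
    RiskTier.HIGH: "risk assessment, data quality, transparency, human oversight",
    RiskTier.UNACCEPTABLE: "banned: do not build or buy",
}


@dataclass
class UseCase:
    name: str       # hypothetical use case
    owner: str      # hypothetical accountable team
    tier: RiskTier  # assigned by your working group


def review_order(register):
    """Return use cases with the highest-risk items first."""
    return sorted(register, key=lambda uc: uc.tier, reverse=True)


def is_permitted(use_case):
    """Anything in the unacceptable tier is blocked outright."""
    return use_case.tier != RiskTier.UNACCEPTABLE


# Hypothetical register entries for illustration.
register = [
    UseCase("Meeting notes summariser", "Operations", RiskTier.MINIMAL),
    UseCase("Customer service chatbot", "Customer Care", RiskTier.LIMITED),
    UseCase("CV screening for recruitment", "People & Culture", RiskTier.HIGH),
]

for uc in review_order(register):
    print(f"{uc.tier.name:<12} {uc.name}: {OBLIGATIONS[uc.tier]}")
```

Sorting by tier means the highest-risk use cases get reviewed first, and the `is_permitted` check gives you a single place to stop anything your organisation has decided it won’t do.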

Set accountability

Create a structure for decision-making, accountability, and oversight of AI systems.

Determine who will be responsible for which stages of selection, use, development and monitoring of AI tools in your organisation. Create processes for reporting and governance so that your usage guidelines are followed as intended.
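One lightweight way to check that accountability is complete is to list each stage against a named owner and flag any gaps. The sketch below is a minimal illustration: the four stages come from the paragraph above, while the role names and the `unassigned_stages` helper are hypothetical.

```python
# Stages taken from the paragraph above.
STAGES = ("selection", "use", "development", "monitoring")

# Hypothetical owners; "monitoring" is deliberately missing to show the gap check.
accountability = {
    "selection": "Head of Procurement",
    "use": "Department heads",
    "development": "Chief Technology Officer",
}


def unassigned_stages(assignments, stages=STAGES):
    """Return the stages that have no named owner, in lifecycle order."""
    return [s for s in stages if not assignments.get(s, "").strip()]


gaps = unassigned_stages(accountability)
print("Stages without an owner:", gaps)
```

Running a check like this whenever roles change helps keep the accountability structure from quietly developing holes.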

Design monitoring, control and review procedures

Establish procedures for monitoring AI performance and managing deviations from the policy. These procedures will enable you to monitor how your AI systems are performing and following your agreed policies. You should also regularly evaluate your policies to ensure they remain effective at mitigating risks and evolve with changes in processes and technology.

Plan & Implement

When the guidelines are complete, they need to be communicated clearly to all stakeholders so that everyone understands them (potentially confirmed with a short test). Provide training for employees on responsible AI usage and the implications of the AI policy. Given the impact across most workforces, this could cover the whole company as AI users, with more focused training for the group of AI designers or implementers.

Once this is done you should have a process to monitor and update the policy.

Develop best practice standards & training

Create standards for using AI while mitigating risk and balancing human and artificial intelligence appropriately. Outline key scenarios, for example customer risks, to help teams understand what is required.

What is out there today?

Google AI Principles

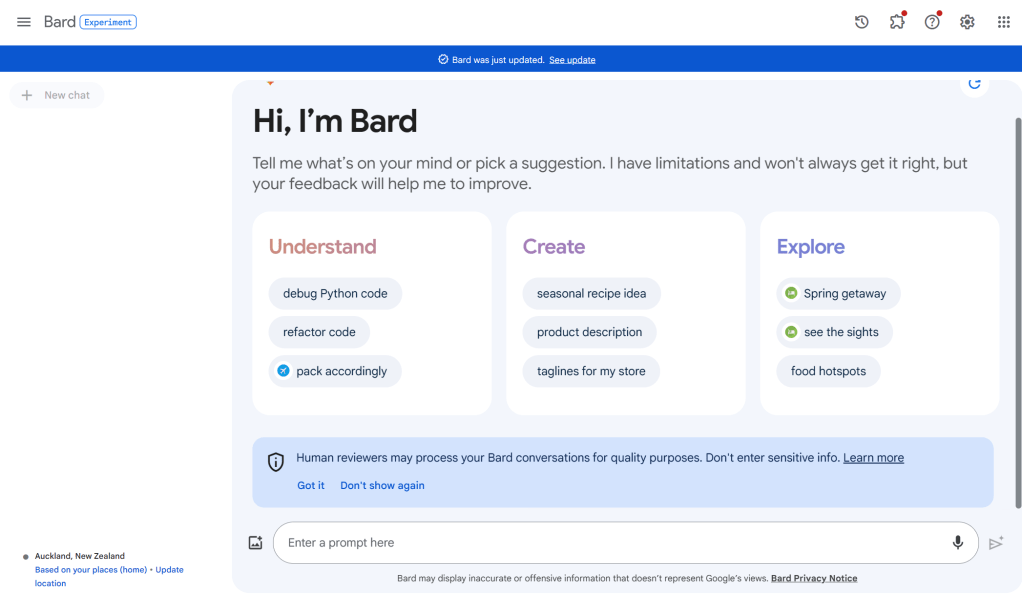

Google have a very clear set of principles outlined on their AI site: https://ai.google/responsibility/principles/

Below is a summary based on the website as at 23 March 2024. The structure is very easy to read and understand: it sets out the broad outcomes they want to focus on and outlines where they will not participate (while acknowledging that this has changed, and will change, as they learn more).

What is allowed?

- Be socially beneficial.

- Avoid creating or reinforcing unfair bias.

- Be built and tested for safety.

- Be accountable to people.

- Incorporate privacy design principles.

- Uphold high standards of scientific excellence.

- Work to limit potentially harmful or abusive applications.

What is not allowed?

- Technologies that cause or are likely to cause overall harm.

- Weapons or other technologies whose principal purpose is to cause injury.

- Surveillance that violates internationally accepted norms.

- Technologies that contravene widely accepted principles of international law and human rights.

- This list may evolve.

Google also publish versions of their principles going back to 2019, and these have largely stayed consistent.

OECD AI Principles

Below is a summary based on the website (https://oecd.ai/en/ai-principles) as at 23 March 2024.

The OECD AI Principles promote use of AI that is innovative and trustworthy and that respects human rights and democratic values. The principles represent a common aspiration that can shape a human-centric approach to trustworthy AI.

Values-based principles

- Inclusive growth, sustainable development and well-being

- Human-centred values and fairness

- Transparency and explainability

- Robustness, security and safety

- Accountability

Recommendations

- Investing in AI R&D

- Fostering a digital ecosystem for AI

- Providing an enabling policy environment for AI

- Building human capacity and preparing for labour market transition

- International co-operation for trustworthy AI

As an organisation focussed on policy development, the OECD concentrates more on the recommendations and actions that countries and policymakers should take. Their recommendations still highlight areas that a company needs to address: investment, building a digital ecosystem, outlining policies, and providing upskilling and transition support for current team members.

Spark NZ AI Principles

Spark have committed to a set of AI principles and made them public, which shows great leadership among NZ corporates. Their policy is available here:

Spark NZ AI Principles (December 2023).pdf

Artificial Intelligence (AI) technologies are evolving and being deployed at scale. These technologies have increasingly sophisticated capabilities, some of which can directly impact people or influence their behaviours, opinions, and choices.

- Human centred

- Ethical design

- Diversity, inclusivity, and bias

- Safety and reliability

- Privacy

- Informed human decision making

- Explicability and transparency

Interestingly, unlike Google, Spark do not outline what they will not do. Instead, they focus on how they will act in line with their ethics and requirements.

Wrap Up

There are many resources available as a starting point for getting this underway at your company – you just need to start. Make sure that you engage broad stakeholders to give you great inputs on the future needs of your business to deliver on responsible AI (or no AI if that’s the business decision and risk appetite). We look forward to seeing many more companies in New Zealand publish their approach and share with their customers and key stakeholders for a more transparent future for us all.
